“No Duh,” say senior developers everywhere.

The article explains that vibe code often is close, but not quite, functional, requiring developers to go in and find where the problems are - resulting in a net slowdown of development rather than productivity gains.

https://www.youtube.com/watch?v=VsE0BwQ3l8U&t=1492s

And the band plays on

I code with LLMs every day as a senior developer, but agents are mostly a big lie. LLMs are great as an information index and for rubber duck chats, which is already an incredible feature of the century, but agents are fundamentally bad. Even for Python they are intern-level bad. I was just trying the new Claude, and instead of using Python’s pathlib.Path it reinvented its own file system path utils. And pathlib is not even some new Python feature: it has been the de facto way to manage paths for at least 3 years now.

That being said, when prompted in great detail with exact instructions, agents can be useful, but that’s not what’s being sold here.

After so many iterations, it seems a fundamental breakthrough in AI tech is still needed for agents, as diminishing returns are hitting hard now.
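For anyone who hasn't used it, here is a minimal sketch of the kind of thing pathlib already handles, so nobody needs hand-rolled path utils. The function name `config_path` and the directory layout are made up for illustration; only the `pathlib` calls are real standard-library API.

```python
from pathlib import Path


def config_path(base_dir: str, name: str) -> Path:
    """Build <base_dir>/config/<name>.toml (hypothetical layout) without string slicing."""
    # The / operator joins path segments using the right separator for the OS.
    return Path(base_dir) / "config" / f"{name}.toml"


p = config_path("/srv/app", "settings")
print(p.name)         # file name with extension
print(p.stem)         # file name without extension
print(p.suffix)       # extension, including the dot
print(p.parent.name)  # name of the containing directory
```

Every one of those attributes is something a reinvented path helper would have to get right by hand.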

Oh yes. The Great pathlib. The Blessed pathlib. Hallowed be it and all it does.

I’m a Ruby girl. A couple of years ago I was super worried about my decision to finally start learning Python seriously. But once I ran into pathlib, I knew for sure that everything will be fine. Take an everyday headache problem. Solve it forever. Boom. This is how standard libraries should be designed.

I disagree. Take a routine problem and invent a new language for it. Then split it into various incompatible dialects, and make sure in all cases it requires computing power that no one really has.

LLMs work great to ask about tons of documentation and learn more about high-level concepts. It’s a good search engine.

The code they produce has basically always disappointed me.

On proprietary products, they are awful. So many hallucinations that waste hours. A manager used one on a code review of mine and only admitted it after I spent the afternoon chasing it.

I sometimes get up to five lines of viable code. Then upon occasion what should have been a one liner tries to vomit all over my codebase. The best feature about AI enabled IDE is the button to decline the mess that was just inflicted.

In the past week I had two cases I thought would be “vibe coding” fodder, blazingly obvious just tedious. One time it just totally screwed up and I had to scrap it all. The other one generated about 4 functions in one go and was salvageable, though still off in weird ways. One of those was functional, just nonsensical. It had a function to check whether a certain condition was present or not, but instead of returning a boolean, it passed a pointer to a string and set the string to “” to indicate false… So damn bizarre, hard to follow and needlessly more lines of code, which is another theme of LLM generated code.
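A rough Python analogue of that pattern, for anyone trying to picture it. The original involved a pointer to a string; Python has no pointers, so a one-element list stands in as the out-parameter here. All names (`has_flag`, `has_flag_roundabout`, the `settings` dict) are hypothetical and not taken from the generated code.

```python
def has_flag_roundabout(settings: dict, out: list) -> None:
    """Roundabout style: report the result by mutating a caller-supplied slot.

    An empty string means "false"; any non-empty string means "true".
    """
    out[0] = "present" if "flag" in settings else ""


def has_flag(settings: dict) -> bool:
    """Direct style: just return the boolean."""
    return "flag" in settings


# The roundabout version needs setup and a string comparison at every call site.
slot = [None]
has_flag_roundabout({"flag": 1}, slot)
roundabout_result = slot[0] != ""

# The direct version is one expression.
direct_result = has_flag({"flag": 1})
```

Both give the same answer; one of them just takes three times the code to get there.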

I’m not super surprised, but AI has been really useful when it comes to learning or giving me a direction to look into something more directly.

I’m not really an advocate for AI, but there are some really nice things AI can do. And I like to test the code quality of the models I have access to.

I always ask for an FTP server and a DNS server to check what it can do, and they work surprisingly well most of the time.

But will something be done about it?

NOooOoOoOoOoo. As long as it is still the new shiny toy for techbros and executive-bros to tinker with, it’ll continue.

AI coding is the stupidest thing I’ve seen since someone decided it was a good idea to measure the code by the amount of lines written.

More code is better, obviously! Why else would a website to see a restaurant menu be 80 MB? It’s all that good, excellent code.

I use AI as an entryway to learning, or for finding the name of a technique that I’m thinking of but can’t remember or don’t know the name of, so that I can then look elsewhere for proper documentation. I would never have it just blindly writing code.

Sadly, search engines getting shittier has sort of forced me to use it to replace them.

Then it’s also good to quickly parse an error for anything obviously wrong.

it’s slowing you down. The solution to that is to use it in even more places!

Wtf was up with that conclusion?

I don’t think it’s meant to be a conclusion. The article serves as a recap of several reports and studies about the effectiveness of LLMs for coding, and the final quote from Bain & Company was a counterpoint to the previous ones, asserting that productivity gains are minimal at best, but also that measuring productivity is a grey area.

I have been vibe coding a whole game in JavaScript to try it out. So far I have gotten a pretty ok game out of it. It’s just a simple match three bubble pop type of thing so nothing crazy but I made a design and I am trying to implement it using mostly vibe coding.

That being said the code is awful. So many bad choices and spaghetti code. It also took longer than if I had written it myself.

So now I have a game that’s kind of hard to modify haha. I may try to set up some unit tests and have it refactor using those.

Wait, are you blaming AI for this, or yourself?

Blaming? I mean it wrote pretty much all of the code. I definitely wouldn’t tell people I wrote it that way haha.

Sounds like vibecoders will have to relearn the lessons of the past 40 years of software engineering.

As with every profession every generation… only this time on their own because every company forgot what employee training is and expects everyone to be born with 5 years of experience.

Imagine if we did “vibe city infrastructure”. Just throw up a fucking suspension bridge and we’ll hire some temps to come in later to find the bad welds and missing cables.

Even though this shit was apparent from day fucking 1, at least the Tech Billionaires were able to cause mass layoffs, destroy an entire generation of new programmers’ careers, introduce an endless amount of tech debt and security vulnerabilities, all while grifting investors/businesses and making billions off of all of it.

Sad excuses for sacks of shit, all of them.

Look on the bright side, in a couple of years they will come crawling back to us, desperate for new things to be built so their profit machines keep profiting.

Current ML techniques literally cannot replace developers for anything but the most rudimentary of tasks.

I wish we had true apprenticeships out there for development and other tech roles.

Glad someone paid a bunch of worthless McKinsey consultants for what I could’ve told you myself.

It is not worthless. My understanding is that management only trusts sources that are expensive.

Yep, going through that at work, they hired several consultant companies and near as I can tell, they just asked employees how the company was screwing up, we largely said the same things we always say to executives, they repeated them verbatim, and executives are now praising the insight on how to fix our business…

No shit, Sherlock!

The most immediately understandable example I heard of this was from a senior developer who pointed out that LLM generated code will build a different code block every time it has to do the same thing. So if that function fails, you have to look at multiple incarnations of the same function, rather than saying “oh, let’s fix that function in the library we built.”
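A toy illustration of that point, as a sketch only. The email-normalizing functions below are invented for the example and don't come from any real codebase; the two "incarnations" stand in for what you get when the same task is regenerated at each call site.

```python
# Two call sites, each with its own freshly generated copy of the same logic.
def signup_normalize_email(raw: str) -> str:
    return raw.strip().lower()


def login_normalize_email(raw: str) -> str:
    # A slightly different incarnation: this one forgets to strip whitespace.
    return raw.lower()


# The alternative: one shared helper, so a bug gets fixed in exactly one place.
def normalize_email(raw: str) -> str:
    """Trim surrounding whitespace and lowercase the address."""
    return raw.strip().lower()


raw = "  Alice@Example.com "
# The duplicated versions have silently drifted apart.
duplicates_agree = signup_normalize_email(raw) == login_normalize_email(raw)
```

With the duplicated versions, a login bug means hunting down every copy and checking how each one differs; with the shared helper, there is one function to fix.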

Yeah, code bloat with LLMs is fucking monstrous. If you use them, get used to immediately scouring your code for duplications.

Yeah, if I use it and it generates more than 5 lines of code, now I just immediately cancel it out because I know it’s not worth even reading. So bad at repeating itself and failing to reasonably break things down into logical pieces…

With that I only have to read some of its suggestions, still throw out probably 80% entirely, fix up another 15%, and actually use 5% without modification.

There are tricks to getting better output from it, especially if you’re using Copilot in VS Code and your employer is paying for access to models, but it’s still asking for trouble if you’re not extremely careful, extremely detailed, and extremely precise with your prompts.

And even then it absolutely will fuck up. If it actually succeeds at building something that technically works, you’ll spend considerable time afterwards going through its output and removing unnecessary crap it added, fixing duplications, securing insecure garbage, removing mocks (God… So many fucking mocks), and so on.

I think about what my employer is spending on it a lot. It can’t possibly be worth it.

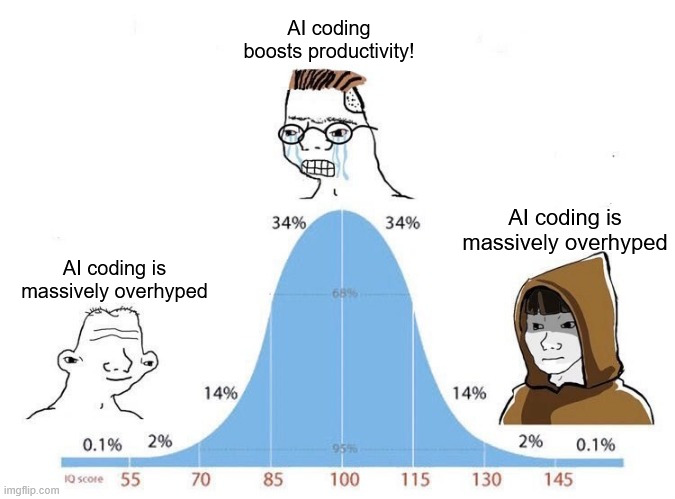

“No Duh,” say senior developers everywhere.

I’m so glad this was your first line in the post

No duh, says a layman who never wrote code in his life.

Oddly enough, my grasp of coding is probably the same as the guy in the middle but I still know that LLM generated code is garbage.

Yeah, I actually considered putting the same text on all 3, but we gotta put the idiots that think it’s great somewhere! Maybe I should have put it with the dumbest guy instead.

Think this one needs a bimodal curve with the two peaks representing the “caught up in the hype” average coder and the realistic average coder.

Yeah, there’s definitely morons out there who never bothered to even read about the theory of good code design.